In my past experience designing conversational systems, I saw firsthand the limitations of traditional AI. The systems I worked with could reliably detect entities, but their rigid logic made them nearly impossible to scale. Conversations followed preprogrammed paths: if the user said X, respond with Y. Any deviation broke the flow, highlighting how inflexible these systems were.

Agents, powered by foundation models, change this.

They’re autonomous systems capable of handling unpredictable scenarios and collaborating seamlessly. An agent can plan a trip, gather real-time data, or manage a customer account, adapting to changes on the fly.

Agents aren’t just users of tools; they’re tools themselves. Like modular components, they work independently or integrate with others to solve complex problems. Predictive models brought precision forecasting. Generative models redefined creativity. Now, Agentic AI takes intelligence into autonomous action.

In this article, we’ll dissect the anatomy of agents, explore their collaboration, and dive into the infrastructure needed to scale them into powerful, interconnected ecosystems.

What’s an Agent?

At its simplest, an agent has agency: it doesn’t rely on static paths but reasons, uses tools, and adapts dynamically. Unlike a scripted bot, an agent evolves its workflow in real time as unpredictable inputs arise.

In artificial intelligence, agents have a long history, from early theoretical considerations by Alan Turing and John McCarthy to rule-based reasoning agents in the 1960s. These agents were designed to act autonomously within a defined context, but their capabilities were limited by narrow applications and rigid logic.

Today, the emergence of foundation models has transformed what’s possible.

These models provide the reasoning and generalization needed for agents to adapt dynamically to complex, unpredictable environments. An agent’s environment defines its scope, be it a chessboard, the web, or the road, and its tools determine what actions it can take. Unlike earlier systems, modern agents combine powerful reasoning with versatile tools, unlocking applications that were once unimaginable.

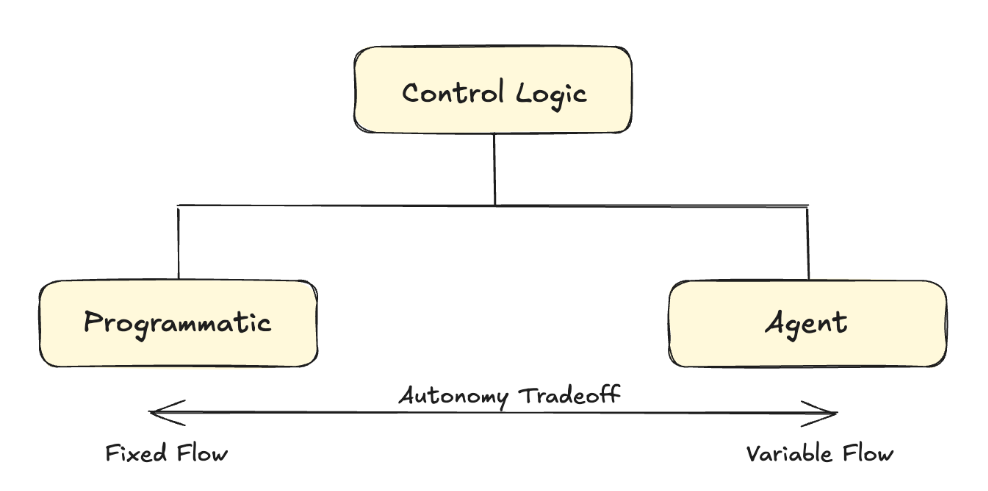

Control logic, programmatic versus agentic

In the next section, we’ll dissect their anatomy—how agents perceive, reason, act, and learn.

Dissecting the Anatomy of an Agent

Just like humans, agents solve problems by combining their senses, memory, reasoning, and ability to act. But before we dive into the mechanics of how they do this, there’s one foundational element that underpins everything: their persona.

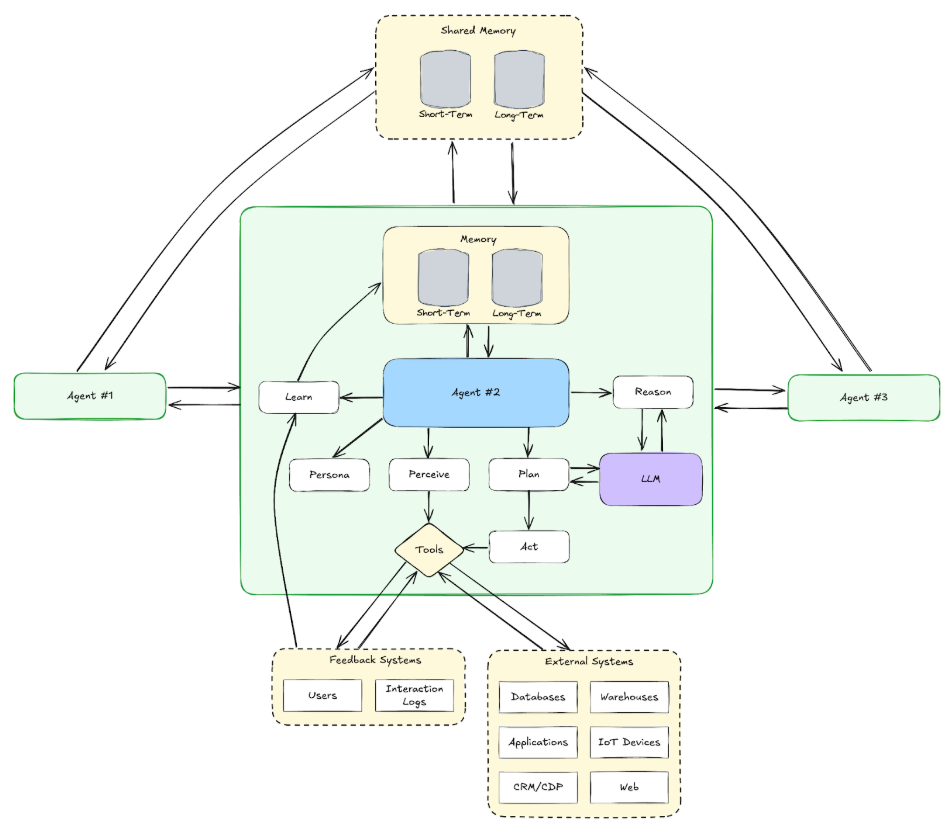

The Anatomy of a Multi-Agent System

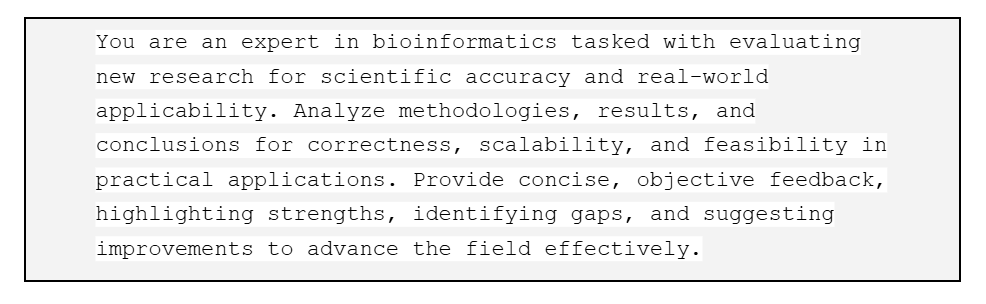

Persona (Job Function)

The persona of an agent defines its job function and expertise. It’s like a detailed job description embedded into the system prompt, shaping the agent’s behavior and responses. The system prompt sets expectations and influences the model’s probability distribution over tokens to align outputs with the defined role.

Example System Prompt:
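As a minimal, hypothetical sketch, a persona could be assembled into a system prompt like this. The `Persona` class, the support role, and the rules are all assumptions made up for illustration, and the role/content message layout is a common chat convention rather than a specific vendor API.

```python
from dataclasses import dataclass, field


@dataclass
class Persona:
    """Hypothetical container for an agent's job description."""
    role: str
    expertise: list
    constraints: list = field(default_factory=list)

    def to_system_prompt(self) -> str:
        # Render the job description as plain text for the system prompt.
        lines = [f"You are a {self.role}."]
        lines.append("Your areas of expertise: " + ", ".join(self.expertise) + ".")
        for rule in self.constraints:
            lines.append(f"Rule: {rule}")
        return "\n".join(lines)


support_persona = Persona(
    role="customer support specialist for a software company",
    expertise=["billing questions", "account recovery"],
    constraints=["Escalate to a human when a refund is requested."],
)

# The system message carries the persona; the user message carries the task.
messages = [
    {"role": "system", "content": support_persona.to_system_prompt()},
    {"role": "user", "content": "I was charged twice this month."},
]
```

Keeping the persona as structured data rather than a hand-edited string makes it easier to reuse the same role across several agents.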

Perception (Sensing)

With a clear role, the first step to solving any problem is understanding the environment. For agents, perception is their sensory input, that is, how they gather data from the world around them. Humans use eyes, ears, and touch; agents use APIs, sensors, and user inputs.

- Example: A logistics agent senses delays by pulling real-time data from traffic APIs and weather forecasts, much like a human driver checks traffic reports.

Reasoning and Decision-Making

Once information is gathered, it needs to be processed and understood. Reasoning is the agent’s ability to analyze data, derive insights, and decide what to do next. For humans, this happens in the brain. For agents, it’s powered by models like LLMs, which dynamically adapt to inputs and contexts.

- Example: A customer service agent might analyze a user’s tone to identify frustration, cross-reference account history for unresolved issues, and decide to escalate the case.

Memory

Memory allows agents to retain domain-specific information across interactions. It’s not about learning, which is a separate part of the anatomy. Humans rely on both short-term memory (like recalling the start of a conversation) and long-term memory (like remembering a skill learned years ago). Agents work the same way.

Short-term memory allows the agent to keep track of the immediate context within a conversation, typically held in memory buffers for the duration of the session. Long-term memory stores historical data, such as user preferences or past interactions, in a vector database or another persistent store. A vector database enables semantic search: text is stored as embeddings, so the agent can retrieve information that is related in meaning rather than only by exact keyword.

- Example: A sales assistant remembers past interactions, like noting a client’s interest in a specific feature, and uses this to tailor follow-ups.
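The two kinds of memory can be sketched roughly as follows. This is a toy under stated assumptions: `embed` is a bag-of-words stand-in for a real embedding model, a plain list stands in for a vector database, and the `AgentMemory` class and its method names are hypothetical.

```python
import math
from collections import Counter, deque


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: a bag-of-words count vector.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class AgentMemory:
    def __init__(self, short_term_size: int = 3):
        # Short-term: a bounded buffer of the most recent messages.
        self.short_term = deque(maxlen=short_term_size)
        # Long-term: (text, vector) pairs, standing in for a vector database.
        self.long_term = []

    def observe(self, message: str) -> None:
        self.short_term.append(message)

    def remember(self, fact: str) -> None:
        self.long_term.append((fact, embed(fact)))

    def recall(self, query: str, k: int = 1) -> list:
        # Rank stored facts by similarity to the query and return the top k.
        q = embed(query)
        ranked = sorted(self.long_term, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = AgentMemory(short_term_size=2)
memory.remember("client asked about the reporting dashboard feature")
memory.remember("client prefers email over phone calls")
for message in ["Hi", "Any update?", "Thanks"]:
    memory.observe(message)

best_match = memory.recall("which feature did the client ask about")
```

Here the oldest message falls out of the short-term buffer once it is full, while long-term facts stay available for similarity-based recall.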

Planning

Once the agent knows what needs to be done, it devises a plan to achieve its goal. This step mirrors how humans strategize: breaking a problem into smaller steps and prioritizing actions.

- Example: A meal-planning agent organizes recipes for the week, accounting for dietary restrictions, available ingredients, and the user’s schedule.
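One way to picture a plan is as a set of steps with dependencies that must be put in a workable order. The sketch below hard-codes hypothetical steps for the meal-planning example; in a real agent the model would typically propose the steps, and the step names here are assumptions for illustration only.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps that must finish before it can start.
plan = {
    "read_dietary_restrictions": set(),
    "check_pantry": set(),
    "pick_recipes": {"read_dietary_restrictions", "check_pantry"},
    "build_shopping_list": {"pick_recipes"},
    "schedule_meals": {"pick_recipes"},
}

# static_order() yields the steps so that every prerequisite comes first.
ordered_steps = list(TopologicalSorter(plan).static_order())
```

The relative order of independent steps, such as the two that have no prerequisites, is not something this sketch relies on.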

Action

Planning is worthless without execution. Action is where agents interact with the world, whether by sending a message, controlling a device, or updating a database.

- Example: A customer support agent updates a ticket, issues a refund, or sends an email to resolve an issue.

The agent’s execution handlers are responsible for ensuring these actions are performed accurately and validating the outcomes.
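A rough sketch of such an execution handler is shown below. The `execute` function, the `ActionResult` type, and the in-memory `tickets` dictionary are hypothetical stand-ins; the point is only that the handler confirms the outcome rather than assuming the action worked.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ActionResult:
    succeeded: bool
    detail: str


def execute(action: Callable[[], object], verify: Callable[[], bool], name: str) -> ActionResult:
    """Run an action, then confirm its effect instead of assuming success."""
    try:
        action()
    except Exception as exc:
        # Report the failure to the caller rather than crash the agent loop.
        return ActionResult(False, f"{name} raised {exc!r}")
    if not verify():
        return ActionResult(False, f"{name} ran but its outcome could not be confirmed")
    return ActionResult(True, f"{name} completed")


tickets = {"T-1": "open"}  # in-memory stand-in for a ticketing system

resolved = execute(
    action=lambda: tickets.update({"T-1": "resolved"}),
    verify=lambda: tickets["T-1"] == "resolved",
    name="resolve_ticket",
)

# Removing a ticket that does not exist raises KeyError, which is captured.
failed = execute(
    action=lambda: tickets.pop("T-404"),
    verify=lambda: True,
    name="delete_ticket",
)
```

Returning a result object gives the reasoning step something concrete to react to, for example retrying or escalating when `succeeded` is false.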

Learning

Humans improve by learning from mistakes and adapting to new information. Agents do the same, using machine learning to refine their reasoning, improve predictions, and optimize actions.

- Example: A product recommendation engine tracks click-through rates and adjusts its suggestions based on what resonates with users.

This process may involve adjusting the agent’s context dynamically during prompt assembly, allowing it to refine its responses based on situational feedback without making permanent changes to the model’s weights. Learning can also occur through reinforcement learning, where decision-making is optimized using rewards or penalties tied to specific actions. In many cases, adapting context provides a flexible and efficient way for agents to improve without the overhead of fine-tuning.
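The context-based form of learning can be sketched as feedback notes that are folded into the next prompt. The `FeedbackContext` class and its prompt layout are assumptions for illustration; no model weights are touched, only the text the model would see.

```python
class FeedbackContext:
    """Keeps recent feedback notes and folds them into the next prompt."""

    def __init__(self, base_instructions: str, max_notes: int = 3):
        self.base_instructions = base_instructions
        self.max_notes = max_notes
        self.notes = []

    def record(self, note: str) -> None:
        # Keep only the most recent notes so the prompt stays bounded.
        self.notes.append(note)
        self.notes = self.notes[-self.max_notes:]

    def assemble_prompt(self, user_message: str) -> str:
        parts = [self.base_instructions]
        if self.notes:
            parts.append("Lessons from earlier feedback:")
            parts.extend(f"- {note}" for note in self.notes)
        parts.append(f"User: {user_message}")
        return "\n".join(parts)


context = FeedbackContext("You recommend products.", max_notes=2)
before = context.assemble_prompt("Suggest a laptop.")

context.record("Users ignored suggestions above their stated budget.")
context.record("Short lists of three items got more clicks.")
context.record("Mention battery life for laptops.")
after = context.assemble_prompt("Suggest a laptop.")
```

Because `max_notes` is 2 in this usage, the oldest note is dropped once the third arrives, which keeps the assembled prompt from growing without limit.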

Coordination and Collaboration

Humans rarely work alone—we collaborate, share knowledge, and divide tasks. In multi-agent systems, coordination enables agents to do the same, working together to achieve shared goals.

- Example: A CRM assistant updates a customer’s contact details in Salesforce while notifying a billing assistant agent to adjust subscription records.

This collaboration is often powered by message brokers like Apache Kafka, which facilitate real-time communication and synchronization between agents. The ability to share state and tasks dynamically makes multi-agent systems significantly more powerful than standalone agents.
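The publish/subscribe pattern behind this kind of coordination can be illustrated with a toy in-memory broker. This is not Kafka and does not use any Kafka API: `InMemoryBroker`, the topic name, and the two agent functions are hypothetical, and delivery here is synchronous, whereas a real broker would deliver messages asynchronously and persist them.

```python
from collections import defaultdict


class InMemoryBroker:
    """Toy publish/subscribe broker standing in for a real message broker."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, message):
        # Deliver the message to every handler subscribed to the topic.
        for handler in self._subscribers[topic]:
            handler(message)


broker = InMemoryBroker()
crm_records = {}
billing_records = {}


def billing_agent(message):
    # Reacts to contact changes without the CRM agent calling it directly.
    billing_records[message["customer_id"]] = message["email"]


def crm_agent_update_contact(customer_id, email):
    # The CRM agent does its own work, then announces what changed.
    crm_records[customer_id] = email
    broker.publish("customer.contact_updated", {"customer_id": customer_id, "email": email})


broker.subscribe("customer.contact_updated", billing_agent)
crm_agent_update_contact("c-42", "new@example.com")
```

The CRM agent never references the billing agent, so further subscribers can be added without changing the publisher.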

Tool Interface

Humans use tools to amplify their capabilities: doctors use stethoscopes, and programmers use integrated development environments (IDEs). Agents are no different. The tool interface is their bridge to specialized capabilities, allowing them to extend their reach and operate effectively in the real world.

- Example: A travel agent uses flight APIs to find tickets, weather APIs to plan routes, and financial APIs to calculate costs.

These interfaces often rely on modular API handlers or plugin architectures, allowing the agent to extend its functionality dynamically and efficiently.
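A plugin-style tool interface might look something like the registry below. The `tool` decorator, `call_tool` helper, and both tools are hypothetical, and the flight data is a hard-coded placeholder where a real handler would call an external API.

```python
from typing import Callable, Dict

TOOLS: Dict[str, Callable[..., object]] = {}


def tool(name: str):
    """Register a function under a name the agent can refer to."""
    def decorator(func):
        TOOLS[name] = func
        return func
    return decorator


@tool("flight_search")
def flight_search(origin: str, destination: str) -> list:
    # Placeholder data; a real handler would call a flight API here.
    return [{"route": f"{origin}-{destination}", "price": 320}]


@tool("trip_cost")
def trip_cost(flight_price: float, nights: int, nightly_rate: float) -> float:
    return flight_price + nights * nightly_rate


def call_tool(name: str, **kwargs):
    # Look the tool up by name, as an agent would after choosing an action.
    if name not in TOOLS:
        raise KeyError(f"no tool registered as {name!r}")
    return TOOLS[name](**kwargs)


flights = call_tool("flight_search", origin="SFO", destination="JFK")
total = call_tool("trip_cost", flight_price=flights[0]["price"], nights=2, nightly_rate=150.0)
```

New capabilities are added by registering another function, which leaves the agent loop itself unchanged.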

The Takeaway

When you break it down, agents solve problems the same way humans do: they sense their environment, process information, recall relevant knowledge, devise a plan, and take action.

But what sets agents apart isn’t just how they work—it’s their ability to scale.

A human may master one domain, but an agent ecosystem can bring together specialists from countless fields, collaborating to tackle challenges no single system could handle.

In the next section, we’ll explore how to build infrastructure that empowers these agents to thrive—not as isolated tools, but as part of a dynamic, interconnected AI workforce.

Agents as Tools and Microservices

At their core, agents are tools with intelligence.

They can use APIs, external libraries, and even other agents to get the job done. This modularity mirrors the principles of microservices architecture, which has powered enterprise-grade systems for decades. By treating agents as microservices, we can apply the same lessons: design them to be lightweight, specialized, and interoperable. This approach lets us compose sophisticated workflows by combining agents like Lego blocks, scaling capabilities without creating bloated, monolithic systems.

For example, a marketing agent might call a customer segmentation agent to analyze user data and then pass the results to a campaign strategy agent to optimize ad targeting. By treating agents as tools within a shared ecosystem, workflows can be stitched together dynamically, enabling unprecedented flexibility and scalability.
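The composition in this example can be sketched with plain functions standing in for agents. Everything here is an assumption for illustration: real agents would reason with a model and likely run as separate services, and the spend threshold, segment names, and campaign labels are invented.

```python
def segmentation_agent(payload: dict) -> dict:
    # Splits users into two segments using a hypothetical spend threshold.
    high = [u["id"] for u in payload["users"] if u["monthly_spend"] >= 100]
    standard = [u["id"] for u in payload["users"] if u["monthly_spend"] < 100]
    return {"segments": {"high_value": high, "standard": standard}}


def campaign_strategy_agent(payload: dict) -> dict:
    # Picks one campaign per non-empty segment.
    campaigns = {}
    for name, members in payload["segments"].items():
        if members:
            campaigns[name] = "premium upsell" if name == "high_value" else "discount offer"
    return {"campaigns": campaigns}


def marketing_agent(payload: dict, registry: dict) -> dict:
    # Treats the other agents as tools: call one, pass its output to the next.
    segments = registry["segmentation"](payload)
    return registry["campaign_strategy"](segments)


registry = {
    "segmentation": segmentation_agent,
    "campaign_strategy": campaign_strategy_agent,
}

users = {"users": [
    {"id": "u1", "monthly_spend": 250},
    {"id": "u2", "monthly_spend": 40},
]}
result = marketing_agent(users, registry)
```

Because each agent only sees a dictionary in and a dictionary out, any one of them can be replaced or scaled independently of the others.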

Why This Matters for Scalability

This microservices-like architecture is essential for building scalable agent ecosystems. Instead of creating monolithic agents that try to do everything, we can design smaller, specialized agents that work together. This approach enables faster development, easier maintenance, and the ability to scale individual components independently.

By standing on the shoulders of microservices architecture, we can bring enterprise-grade reliability, modularity, and performance to agent systems. The future of GenAI isn’t about building isolated agents; it’s about creating collaborative ecosystems where agents function like microservices, working together seamlessly to solve complex problems.

In the next section, we’ll explore how to apply the lessons of scaling microservices to agent infrastructure, ensuring we’re ready to support the next generation of GenAI systems.

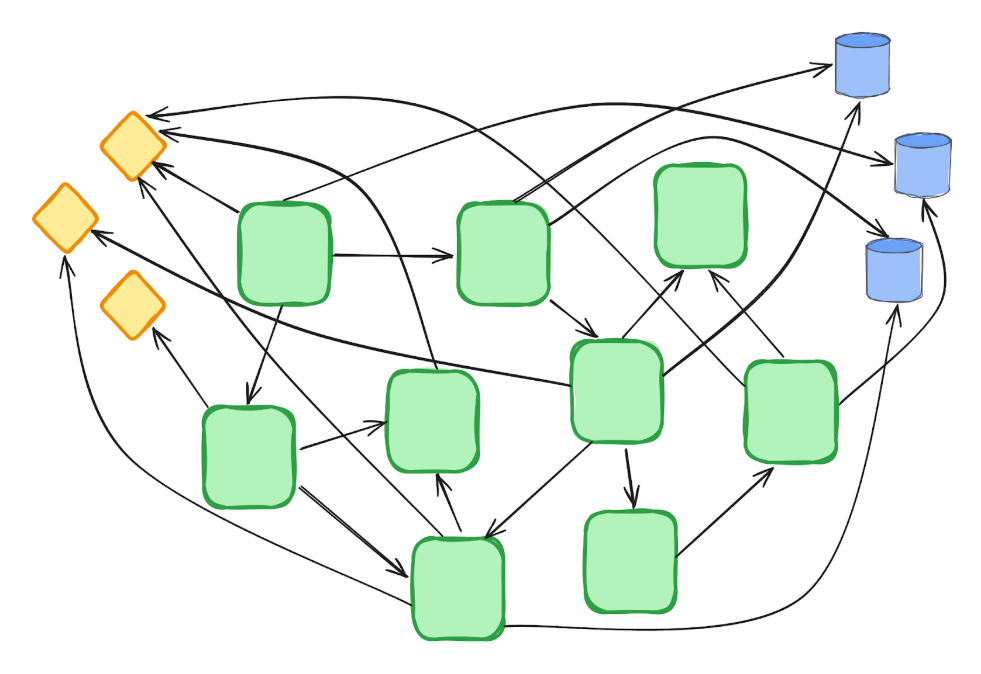

Agents Need Events

The lessons of microservices suggest that traditional request/response architectures don’t scale well for agents.

In these systems, every action requires explicit coordination, introducing delays, bottlenecks, and tightly coupled dependencies. It’s like needing written approval for every decision in an organization—functional in small setups but painfully slow and inefficient as complexity grows.

Multi-agent Systems Lead to a Labyrinth of Tightly Coupled Interdependencies

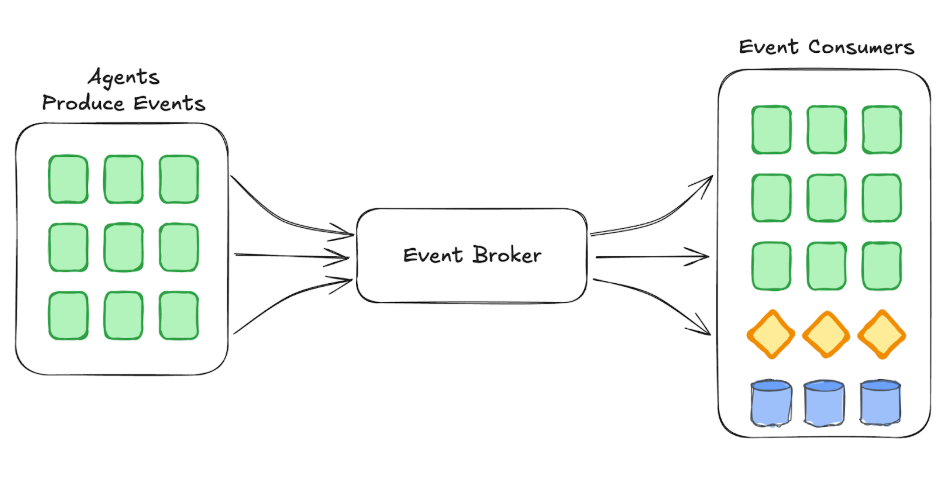

The shift to event-driven architectures marks a pivotal moment in building scalable agent systems. Instead of waiting for direct instructions, agents are designed to emit and listen for events autonomously. Events act as signals that something has happened—a change in data, a triggered action, or an important update—allowing agents to respond dynamically and independently.

Event-Driven Agents: Agents Emit and Listen for Events

The Anatomy of Event-Driven Agents

This architecture directly impacts the components of an agent’s anatomy:

- Perception: Agents sense the world through events, which provide structured, real-time inputs.

- Reasoning: Events drive the decision-making process, with agents dynamically interpreting signals to determine next steps.

- Memory: Event persistence ensures that historical data is always available for contextual recall, reducing the risk of lost or incomplete interactions.

- Action: Instead of rigid workflows, agents act by emitting events, enabling downstream agents or systems to pick up where needed.

Agent interfaces in this system are no longer defined by rigid APIs but by the events they emit and consume. These events are encapsulated in simple, standardized formats like JSON payloads, which:

- Simplify how agents understand and react to changes.

- Promote reusability across different workflows and systems.

- Enable seamless integration in dynamic, evolving environments.
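An agent whose interface is defined by the events it consumes and emits might be sketched as follows. The `Event` envelope fields, the event type names, and the `RefundAgent` are hypothetical; the article does not prescribe a schema, so this is one possible shape rather than a standard.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Event:
    type: str      # what happened, e.g. "order.delayed"
    source: str    # which agent or system emitted it
    data: dict     # event-specific payload

    def to_json(self) -> str:
        return json.dumps(asdict(self))

    @staticmethod
    def from_json(raw: str) -> "Event":
        return Event(**json.loads(raw))


class RefundAgent:
    # The interface is the pair of event types, not a callable API.
    consumes = "order.delayed"
    emits = "refund.issued"

    def handle(self, raw_event: str):
        event = Event.from_json(raw_event)
        if event.type != self.consumes:
            return None  # ignore events this agent does not consume
        refund = Event(
            type=self.emits,
            source="refund-agent",
            data={"order_id": event.data["order_id"], "amount": event.data["shipping_fee"]},
        )
        return refund.to_json()


incoming = Event("order.delayed", "logistics-agent", {"order_id": "o-7", "shipping_fee": 9.5}).to_json()
outgoing = RefundAgent().handle(incoming)
```

Downstream agents only need to know the `refund.issued` payload, not which agent produced it.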

Building the Agent Ecosystem

“Going into 2025, there is a greater need to create infrastructure to manage multiple AI agents and applications,” notes VentureBeat.

This is not just a forecast; it’s a call to action.

The anatomy of agents—perception, reasoning, memory, action, and collaboration—lays the foundation for their capabilities, but without the right infrastructure, these pieces can’t scale.

Platforms like Kafka and Flink are at the heart of scaling microservices. By decoupling services through events, these systems enable microservices—and now agents—to interact seamlessly without rigid dependencies. For agents as microservices, this means they can emit and consume events autonomously, dynamically integrating into workflows while ensuring governance, consistency, and adaptability at scale.

The future isn’t just one agent solving one problem; it’s hundreds of agents working in concert, seamlessly scaling and adapting to evolving challenges. To lead in 2025, we must focus not just on building agents but on creating the infrastructure to manage them at scale.

The post More than machines: The inner workings of AI agents appeared first on SD Times.
