In this article, we trace how computer graphics evolved, decade by decade, into the rich interfaces that shape our user experience today.

1950s

The development of computer graphics began in the early 1950s, when projects like Whirlwind and SAGE used the cathode ray tube (CRT) to display visual graphics, with a light pen as an input device for pointing at and drawing on the screen. In 1958, William Higinbotham developed Tennis for Two, one of the first video games with interactive graphics, to entertain visitors at the Brookhaven National Laboratory. The TX-2 computer was built in the same decade. In 1963 it ran Ivan Sutherland’s Sketchpad software, which let users draw basic shapes on the screen with a light pen and save them for future use.

1960s

The term ‘Computer Graphics’ was coined by William Fetter in 1960. In 1963, E. E. Zajac of Bell Telephone Laboratories created a computer-animated film showing how a satellite’s attitude (its orientation) changes as it orbits the Earth. IBM also entered the field in the mid-1960s, releasing the IBM 2250, the first graphics computer for commercial use. In 1966, Ralph Baer developed a simple video game in which one could move points of light on a screen. In the same year, Ivan Sutherland invented the first head-mounted display, which showed two separate wireframe images, one for each eye, to give a 3D effect.

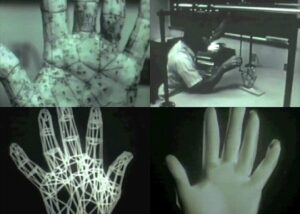

1970s

The 1970s saw a major change in computer graphics, as advances like MOS LSI technology made computer graphics practical. Edwin Catmull created an animation of his hand opening and closing, and his classmate Fred Parke created an animation of his wife’s face. John Warnock, one of the early pioneers, later founded Adobe Systems (in 1982), which today makes some of the most widely used editing software, including Photoshop. In 1977, a major advancement in 3D computer graphics made it possible to draw a 3D representation of an object on the screen, which served as a foundation for most later developments. The decade also saw many 2D arcade video games, such as Pong, Speed Race, Gun Fight, and Space Invaders.

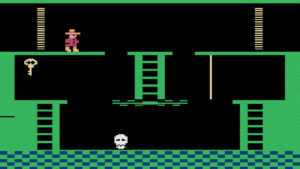

1980s

Building on these developments, modernization and commercialization began in the 1980s. In the early 1980s, high-resolution displays and personal computer systems began to revolutionize computer graphics. Advances in microprocessors and microcontrollers paved the way for many graphics processing unit (GPU) chips. In 1982, Japan’s Osaka University developed a supercomputer that used 257 Zilog Z8001 microprocessors to render realistic 3D graphics. The 1980s has been called the golden era of video games. Companies like Atari, Nintendo, and Sega presented computer graphics to the public through a whole new kind of interface. Personal computers such as the Apple Macintosh and the Amiga allowed users to program their own games. Arcade games made advances in real-time 3D graphics. In this decade, the use of computer graphics also spread to other fields, such as the automobile industry, vehicle design, vehicle simulation, and chemistry.

1990s

The 1990s was the period in which 3D computer graphics flourished and were developed on a mass scale. 3D models became popular in gaming, multimedia, and animation. In the early 1990s, the first computer graphics TV series, La Vie Des Betes, was released in France. In 1995, Pixar released Toy Story, the first fully computer-animated feature film, which was a huge success both commercially and as a milestone for computer graphics. In the same decade, many 3D games, including racing games, first-person shooters, fighting games like Virtua Fighter and Tekken, and the famous Super Mario 64, drew audiences with their interfaces and gaming experience. After these advancements, computer graphics continued to become more realistic and expanded into various fields.

2000s

By the late 1990s, video games and cinema had brought computer graphics into the mainstream, and the trend accelerated in the 2000s. CGI was widely used for television advertisements in the late 1990s and 2000s, and it reached a large audience. This popularity made 3D graphics a standard feature in most fields. Computer graphics in films and video games also became steadily more realistic. Animated films like Ice Age, Madagascar, and Finding Nemo became audience favorites and dominated the box office. Computer-generated feature films such as Final Fantasy: The Spirits Within and The Polar Express drew a lot of attention at the time, as did the Star Wars prequel trilogy, which relied heavily on CGI.

Video games too saw a major upgrade with the release of the Sony PlayStation 2 and 3 and the Microsoft Xbox. Series such as Grand Theft Auto, Assassin’s Creed, Final Fantasy, BioShock, Kingdom Hearts, and Mirror’s Edge, along with many others, grew the video game industry and continued to impress the masses.

2010s

In the 2010s, CGI expanded further and could deliver real-time graphics at ultra-high resolutions such as 4K. Most animated movies are now made with CGI. In video games, Microsoft’s Xbox One and Sony’s PlayStation 4 came to dominate 3D gaming and remain among the most popular consoles.

Since then, computer graphics has kept developing, enriching the audience’s experience and giving users a powerful way to interact with high-resolution visuals. That concludes this history of computer graphics.

The post History of Computer Graphics – 1950s to 2010s appeared first on The Crazy Programmer.

from The Crazy Programmer https://ift.tt/3zeBiBc
